By David Scheler, Computational Linguist

NLP Corpus Consistency and Accuracy

The annotated linguistic corpora used to train natural language processing (NLP) models are often imperfect. To some extent this is inevitable. Corpora are sometimes partially or entirely annotated by automated means, which can introduce mistakes because automatic labelers are probabilistic and their learned representations are imperfect. More often, they are annotated by people with varying degrees of proficiency and training, and even well-trained human annotators make mistakes or apply conventions inconsistently. Some corpora combine the two approaches: human annotators check and correct the output of an automatic labeler. Here too, an annotator may miss errors made by the labeler or introduce new errors of their own.

In addition, almost all large corpora are annotated by multiple people, so even with strict guidelines, we can expect some inconsistency in how annotation conventions are applied. A single annotator can also be inconsistent, annotating similar items differently as they work through a large number of them.

Inevitable though these flaws may be, the more accurate and consistent a corpus is, the more useful it is for training an NLP model. NLP models learn the patterns present in their training data, so the more consistently the desired patterns appear in the data, the more robustly the model will learn them. A few scattered errors can be overcome, since the model will learn the patterns exhibited by the majority of the data. However, if the underlying data exhibits enough inconsistencies with respect to a given pattern, the model can be expected to reproduce those same inconsistencies when making predictions on new data.
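To make the majority-pattern point concrete, here is a deliberately simple sketch: a most-frequent-tag baseline (not the models we actually trained) run on a hypothetical five-sentence toy corpus in which adverbial "kind of" is tagged inconsistently. The function names and the data are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_most_frequent_tag(tagged_sentences):
    """Learn, for each lowercased word, the tag it carries most often."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}


def majority_share(tagged_sentences, word):
    """Fraction of a word's occurrences that carry its most frequent tag."""
    tags = Counter(
        tag for sentence in tagged_sentences for w, tag in sentence if w.lower() == word
    )
    return tags.most_common(1)[0][1] / sum(tags.values())


# Hypothetical toy corpus: adverbial "kind of" is tagged NN IN three times
# and RB RB twice, an inconsistent split in the training data.
corpus = [
    [("kind", "NN"), ("of", "IN"), ("bad", "JJ")],
    [("kind", "NN"), ("of", "IN"), ("tired", "JJ")],
    [("kind", "NN"), ("of", "IN"), ("odd", "JJ")],
    [("kind", "RB"), ("of", "RB"), ("bad", "JJ")],
    [("kind", "RB"), ("of", "RB"), ("sad", "JJ")],
]

model = train_most_frequent_tag(corpus)
print(model["kind"], model["of"])     # NN IN
print(majority_share(corpus, "kind"))  # 0.6
```

This baseline simply follows the majority, so the two RB-tagged training items are treated as noise. A context-sensitive model can go further and reproduce the split itself, choosing one tagging or the other depending on incidental features of the context.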

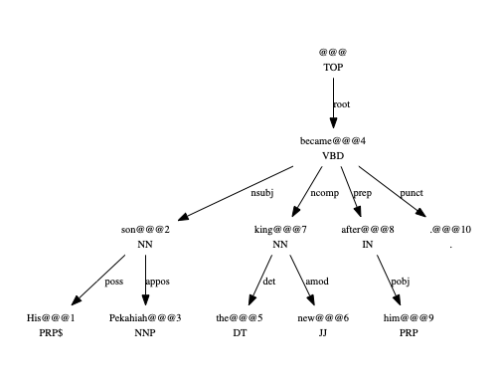

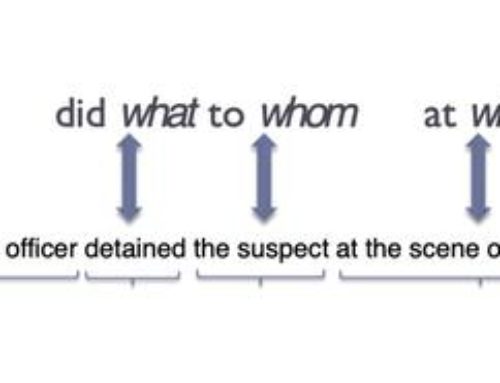

For these reasons, we reviewed the corpora used to train some of our NLP tools and decided to revise them. Specifically, we revised the corpora for part-of-speech tagging and dependency parsing and, to a more limited extent, the corpus for semantic role labeling. (See this article for discussion of the more limited revisions to the semantic role labeling data.) For further discussion of the various pros and cons of revising corpus data, see Manning 2011.

In the corpora discussed here, we revised annotations in two main types of cases: obvious annotator errors and corpus inconsistencies.

By obvious annotator errors, I refer to cases where no reasonable convention would annotate the item the way it appears in the corpus, and the annotator themself would almost certainly revise the annotation on closer inspection.

Corpus inconsistency, by contrast, refers to cases where, due to the complexity of the data or ambiguity in the annotation guidelines, several different annotations could reasonably apply to a given item. The problem is that different items exhibiting the same basic pattern are not given the same annotation in the corpus.

Below I give a brief example of each type of flaw, along with the correction we made, drawn from the part-of-speech tagging corpus. Similar corrections were made to the dependency parsing corpus, while different types of changes apply to the semantic role labeling corpus; see this article for details. Sentences are given as tokenized strings. In these examples, tokens whose part-of-speech tags were changed in the revision are given in boldface.

An example of an obvious annotator error is shown in (1). In (1a), the word ‘bore’ is incorrectly given the past-tense verb tag ‘VBD’. In this sentence, ‘bore’ clearly acts as a noun, the head of the compound noun phrase “term limits bore”; we therefore corrected its tag to the noun tag ‘NN’.

(1) He ’s a term limits bore .

a. He/PRP ’s/VBZ a/DT term/NN limits/NNS bore/VBD ./. (original)

b. He/PRP ’s/VBZ a/DT term/NN limits/NNS bore/NN ./. (corrected)
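The examples use the common word/TAG notation with Penn Treebank-style tags. As an illustration of how a correction like (1a) to (1b) can be checked mechanically, here is a small sketch that parses the notation and reports which tokens changed tags between two versions of a sentence. The function names are hypothetical and not part of any tool described in this article.

```python
def parse_tagged(line):
    """Split a 'word/TAG word/TAG ...' string into (word, tag) pairs.

    Splitting on the last '/' means a token such as './.' parses as ('.', '.')
    and a word that itself contains '/' keeps it.
    """
    return [tuple(token.rsplit("/", 1)) for token in line.split()]


def changed_tokens(original, corrected):
    """Return (index, word, old_tag, new_tag) for every token whose tag differs."""
    orig_pairs = parse_tagged(original)
    corr_pairs = parse_tagged(corrected)
    if [w for w, _ in orig_pairs] != [w for w, _ in corr_pairs]:
        raise ValueError("the two versions must contain the same tokens")
    return [
        (i, word, old, new)
        for i, ((word, old), (_, new)) in enumerate(zip(orig_pairs, corr_pairs))
        if old != new
    ]


original = "He/PRP ’s/VBZ a/DT term/NN limits/NNS bore/VBD ./."
corrected = "He/PRP ’s/VBZ a/DT term/NN limits/NNS bore/NN ./."
print(changed_tokens(original, corrected))  # [(5, 'bore', 'VBD', 'NN')]
```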

On the other hand, an example of corpus inconsistency is shown in (2) and (3). In (2a), both words of the phrase “kind of” are tagged as adverbs (the tag ‘RB’), but in a very similar context in (3a) they are tagged as a noun followed by a preposition (the tags ‘NN’ and ‘IN’, respectively). (Note that in the corpus, the stray quotation mark in (3) appears as a sequence of two straight quotes; it appears here as an opening double quotation mark due to typographical limitations.)

(2) Yeah , that ’s kind of bad .

a. Yeah/UH ,/, that/DT ’s/VBZ kind/RB of/RB bad/JJ ./. (original)

b. Yeah/UH ,/, that/DT ’s/VBZ kind/NN of/IN bad/JJ ./. (corrected, by convention)

(3) I feel kind of bad about it “ .

a. I/PRP feel/VBP kind/NN of/IN bad/JJ about/IN it/PRP “/“ ./. (original, correct as is by convention)

In these examples, there are no obvious annotator errors. Because the phrase “kind of”, in the sense of ‘somewhat’ used here, is idiomatic and fixed, reasonable annotation conventions could differ as to whether it should be tagged as “kind/RB of/RB”, as “kind/NN of/IN”, or with some other sequence of tags.

Instead, a single, perhaps partially arbitrary, decision must be made as to which tags to use for the phrase, and that decision must be applied to all cases of the phrase “kind of” with this meaning and in this type of context. But as can be seen from the contrast between (2a) and (3a), that degree of consistency was not achieved in the original corpus.

In our revision, we chose the tagging convention “kind/NN of/IN” to apply to this phrase in the relevant cases. We therefore corrected (2a) to (2b), and made similar corrections in as many such cases as we could find in the corpus.
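A convention like this lends itself to a simple rewrite rule. The sketch below is a hypothetical illustration of how the “kind/NN of/IN” convention could be applied to the pattern in (2a); it is not the tooling we actually used, and the rule's narrow trigger (both tokens tagged RB) is an assumption made to keep it conservative.

```python
def apply_kind_of_convention(pairs):
    """Retag adverbial 'kind/RB of/RB' as 'kind/NN of/IN'.

    Hypothetical rule: it fires only when 'kind' and 'of' are adjacent and
    both tagged RB, the pattern seen in (2a). Any other tagging of the
    phrase is left unchanged for manual review.
    """
    result = list(pairs)
    for i in range(len(result) - 1):
        (w1, t1), (w2, t2) = result[i], result[i + 1]
        if (w1.lower(), t1, w2.lower(), t2) == ("kind", "RB", "of", "RB"):
            result[i] = (w1, "NN")
            result[i + 1] = (w2, "IN")
    return result


def parse_tagged(line):
    """Split 'word/TAG ...' into (word, tag) pairs, splitting on the last '/'."""
    return [tuple(token.rsplit("/", 1)) for token in line.split()]


sentence_2a = "Yeah/UH ,/, that/DT ’s/VBZ kind/RB of/RB bad/JJ ./."
fixed = apply_kind_of_convention(parse_tagged(sentence_2a))
print(" ".join(f"{word}/{tag}" for word, tag in fixed))
# Yeah/UH ,/, that/DT ’s/VBZ kind/NN of/IN bad/JJ ./.
```

Restricting the rule to the RB RB tagging keeps it from touching literal uses such as “what kind of car”, which are already tagged kind/NN of/IN; cases that such a rule cannot safely decide would still need a human check.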

We searched the POS-tagging and parsing corpora for errors and inconsistencies similar to those discussed here, using a range of search criteria, and corrected the relevant examples partly by hand and partly with automated tools. We believe the resulting corpora, which we used to train our new NLP models, are more accurate and more consistent than the originals, leading to better models and more accurate NLP tools.
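The specific search criteria are not listed here. As one illustrative possibility, and not necessarily the procedure behind these revisions, candidate inconsistencies like (2) versus (3) can be surfaced by collecting every word n-gram that receives more than one tag sequence across the corpus. This is a simplified version of the “variation n-gram” idea from the annotation-error-detection literature; the function names below are hypothetical.

```python
from collections import defaultdict


def find_variations(tagged_sentences, n=2):
    """Map each word n-gram to the set of tag sequences it receives.

    Only n-grams annotated in more than one way are returned. These are
    candidates for inconsistency, not confirmed errors, and still need review.
    """
    seen = defaultdict(set)
    for sentence in tagged_sentences:
        words = [w.lower() for w, _ in sentence]
        tags = [t for _, t in sentence]
        for i in range(len(sentence) - n + 1):
            seen[tuple(words[i:i + n])].add(tuple(tags[i:i + n]))
    return {gram: seqs for gram, seqs in seen.items() if len(seqs) > 1}


def parse_tagged(line):
    """Split 'word/TAG ...' into (word, tag) pairs, splitting on the last '/'."""
    return [tuple(token.rsplit("/", 1)) for token in line.split()]


corpus = [
    parse_tagged("Yeah/UH ,/, that/DT ’s/VBZ kind/RB of/RB bad/JJ ./."),
    parse_tagged("I/PRP feel/VBP kind/NN of/IN bad/JJ about/IN it/PRP “/“ ./."),
]
for gram, tag_seqs in sorted(find_variations(corpus).items()):
    print(" ".join(gram), sorted(tag_seqs))
# kind of [('NN', 'IN'), ('RB', 'RB')]
# of bad [('IN', 'JJ'), ('RB', 'JJ')]
```

On a real corpus this produces many harmless hits (genuinely ambiguous words), so its output is a list of places to look rather than a list of corrections.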

References

Manning, C. D. (2011). Part-of-speech tagging from 97% to 100%: Is it time for some linguistics? In International Conference on Intelligent Text Processing and Computational Linguistics (CICLing), pages 171–189. Springer.