By Paulo Malvar, Chief Computational Linguist

Introduction

To me, 2020 was not only the year of the pandemic. It was also the year of hate speech. Please, let me explain. By the end of 2019, I was having internal conversations with my coworkers, outlining why I thought Codeq needed to develop an abuse detection module: one that would strengthen its API by helping community builders moderate conversations within the online communities they have nurtured.

That was my initial goal. I knew how important such a module would be for community builders: moderation is very time-consuming, and being able to do it in an automatic or computer-aided fashion is valuable. As I pointed out in a previous blog post:

“[…] aggressive and/or hateful posts can disrupt otherwise respectful discussions. Such posts are called “toxic”, because they poison a conversation so that other users abandon it.” (Risch & Krestel, 2018: 150)

But then January 2020 hit us with an increasing number of confusing reports about a virus that seemed to have originated in China and was starting to spread all over the world. By late January, January 31st to be precise, a travel ban on foreign nationals coming from China to the US was enacted.

During February 2020 the “issue” escalated rapidly, and it started becoming apparent how serious the situation was.

Finally, in mid-March, many of us understood that there was no escape from this. This probably happened the day the NBA suspended its season (March 11th, 2020). All hell broke loose.

Political rhetoric changed sharply compared to the previous months. On March 16th, 2020, the President of the United States referred to the novel coronavirus as the “Chinese virus”.

While from late January to late February there had been a few thousand racially motivated incidents against Asian Americans, between March 18th and March 26th, 2020, there were 650 direct reports of aggression against Asian Americans. By April 15th, 2020, this figure had increased to 1,497. (source: Wikipedia, 2020)

This is a clear example of how serious things can become when verbal abuse transmutes into hate speech, as “this dangerous manifestation of verbal abuse is a poison that rots implicit and explicit social contracts and norms and ultimately inspires and incites violence against certain groups.” (Malvar, 2020)

As a matter of fact, this was a textbook example of what extensive research, as Burnap & Williams (2014) point out, has demonstrated: “crimes entailing a prejudicial motive often occur in close temporal proximity to galvanizing events” (idem, 1).

In this case, the galvanizing event was the perceived aggression by an Asian country against the United States in the form of a global pandemic. But the trigger that accelerated the expressions of violence (verbal and/or physical) against a certain group of the population was the official stamp of approval crystallized in the denomination of the aggression: “Chinese virus”. From that point on it was unambiguously clear who was to blame and who needed to be targeted.

WARNING: Reader discretion is advised as potentially harmful and/or distressing racist content as well as racial slurs are showcased below.

fuck bat eaters!!! that’s disgusting and i hate china for bringing covid

you fucking ching chong bat eater

go back to your communist country you rice eater

Then at the end of May, George Floyd was horribly murdered in Minneapolis. Protests ensued and quickly escalated into violent confrontations with police and other law enforcement agencies.

Social media was flooded with commentary about what we were all witnessing. Again, a galvanizing event happened, and the target of the collective hate rapidly shifted from Asian to African Americans.

During the summer of 2020, while this story developed, I was still working on collecting data for Codeq’s abuse detection module. We decided to scour Twitter using a collection of keywords and hashtags. Bingo! Polarizing hate speech and outright racist comments were in abundance.

WTF is up with these black animals??

A BLM Lady said looting is reparations! They’re all terrorists & criminals!

The BLM thugs are getting out of control.

you’re supporting the goddamn niggers

I spent countless hours going through vast amounts of data, cleaning and categorizing it. The summer of 2020 was exhausting, physically and emotionally.

Finally, Fall 2020 came and with it the US presidential election. I was still working on polishing Codeq’s abuse classifier, so I decided to do another round of data collection to enrich the training corpus.

I went back to Twitter again, and my searches revealed how the laser-focused hate had transmuted into an amalgam of hateful commentary that brought all the usual suspects together in the perfect hate salad.

CCP demorats are the terrorists along w media social media Hollywood sports their leader is Obama funded by soros

Funny how the China Virus, ANTIFA, BLM and all of the DOMESTIC TERRORISM came during the election year!

This evil snake is Muslim Hussein Obama & the Muslim Brotherhood’s plant in Washington, just like Muslim Hussein Obama was a plant for The NWO Globalist Socialist Elitist demoKKKrats

Codeq’s Hate Speech/Racism Twitter Bot: Codeq Spotlight

Words matter. They shape the mental framework we use to look at the world and tell others what that framework is.

I had a realization last year when I was writing my first post on abusive online behavior.

“I realized that, whereas in the early 2000’s and 2010’s there were some papers scattered here and there, there was a clear uptick in the number of papers published that started right around 2015 and 2016 […]

Moreover, since 2017 the number of conferences and workshops dedicated to researching abusive online behavior has been growing. In 2017 the First Workshop on Abusive Language Online took place in Vancouver, in 2018 First Workshop on Trolling, Aggression and Cyberbullying, and in 2019 the 13th International Workshop on Semantic Evaluation hosted a Shared Task on Multilingual Detection of Hate (HatEval).” (Malvar, idem)

If one wants to find hate speech directed towards a variety of groups of people, it is not that hard. It’s there in our face: online, on television, in print media…

Twitter is no exception to this.

At Codeq we’ve built a bot that scours Twitter and classifies tweets according to whether they contain some kind of abusive verbal content. Specifically, it looks for instances of hate speech or racism.

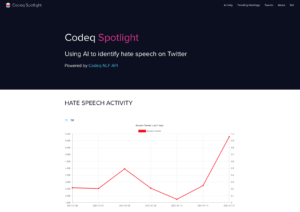

We want to make this visible because we believe it is necessary to cast a spotlight on this dangerous phenomenon. To do precisely this, we have built a platform, Codeq Spotlight, that uses Codeq’s abuse detection module to categorize tweets into different types of verbal abuse.

Codeq Spotlight features two public-facing components. The first component is a Twitter account that periodically posts updates on the volume of hate speech detected during a particular period of time or on which hashtags are associated with large volumes of hate speech.

Codeq Spotlight Twitter Bot

The second component is a support website where we keep an up-to-date record of the hate speech detected on Twitter.

Codeq Spotlight activity section

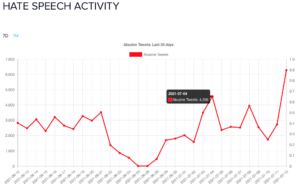

This support website displays charts that showcase the evolution of the aggregated volume of hate speech content detected on Twitter over predefined periods of time.

Codeq Spotlight one month activity chart

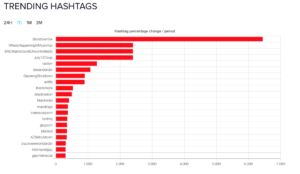
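As a rough illustration of the kind of aggregation that sits behind a chart like this one, the sketch below counts flagged tweets per day over a fixed window. The input format (a list of timestamps for tweets already flagged as hate speech) and the function name `daily_volume` are assumptions made for this example; they are not a description of how Codeq Spotlight is actually implemented.

```python
from collections import Counter
from datetime import date, datetime, timedelta


def daily_volume(flagged_at, end_day, window_days=30):
    """Count flagged tweets per calendar day.

    flagged_at  -- iterable of datetime objects, one per flagged tweet
                   (hypothetical input format)
    end_day     -- last day (a date) to include in the window
    window_days -- number of days in the window, ending at end_day
    """
    start_day = end_day - timedelta(days=window_days - 1)
    counts = Counter(ts.date() for ts in flagged_at)
    # Emit every day in the window, including days with zero detections,
    # so the resulting series can be plotted without gaps.
    days = [start_day + timedelta(days=i) for i in range(window_days)]
    return {day: counts.get(day, 0) for day in days}


if __name__ == "__main__":
    flagged = [
        datetime(2021, 1, 3, 10, 0),
        datetime(2021, 1, 3, 22, 30),
        datetime(2021, 1, 5, 1, 15),
    ]
    for day, n in daily_volume(flagged, date(2021, 1, 5), window_days=3).items():
        print(day.isoformat(), n)
```

Filling in the days with no detections is a deliberate choice here: a chart built from the result shows quiet days as zeros rather than silently skipping them.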

The support website also features a Trending Hashtags section. It shows hashtags with a positive rate of change in the number of tweets flagged as hate speech, that is, hashtags that appear in a growing number of flagged tweets.

Codeq Spotlight 7-day Trending Hashtags

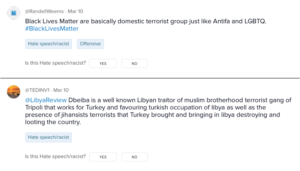
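One simple way to think about a "trending" hashtag is to compare how often it appears in flagged tweets across two consecutive periods and keep the hashtags whose count went up. The sketch below does exactly that. The function name `trending_hashtags`, the two-period comparison, and the placeholder hashtags are assumptions for illustration only; the post does not say how Codeq Spotlight computes its rate of change.

```python
from collections import Counter


def trending_hashtags(previous_tags, recent_tags, top_n=10):
    """Return hashtags whose count in flagged tweets increased.

    previous_tags -- hashtags seen in flagged tweets during the earlier period
    recent_tags   -- hashtags seen in flagged tweets during the later period
    Both inputs are flat lists with one entry per occurrence
    (hypothetical input format).
    """
    previous = Counter(previous_tags)
    recent = Counter(recent_tags)
    # Change in count between the two periods; a Counter returns 0
    # for hashtags that did not appear in the earlier period.
    rising = [
        (tag, recent[tag] - previous[tag])
        for tag in recent
        if recent[tag] - previous[tag] > 0
    ]
    # Largest increase first; ties broken alphabetically for stable output.
    rising.sort(key=lambda item: (-item[1], item[0]))
    return rising[:top_n]


if __name__ == "__main__":
    earlier = ["#tag_a", "#tag_a", "#tag_b"]
    later = ["#tag_a", "#tag_b", "#tag_b", "#tag_b", "#tag_c"]
    print(trending_hashtags(earlier, later))
```

A raw difference in counts favors hashtags that are already large. A relative change (the difference divided by the earlier count) would surface small but fast-growing hashtags instead, at the cost of being noisier.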

Lastly, Codeq Spotlight’s support website also presents a live list of tweets presumed to contain hate speech material, which gives the community an opportunity to tell us whether particular tweets have been misclassified. This feedback is crucial and helps us improve our classifier over time to more accurately detect instances of this dangerous online phenomenon.

Codeq Spotlight feedback section

Final Words

Combating the proliferation of hate speech/racist comments online as well as in the real world is a community effort.

At Codeq we have built Codeq Spotlight to detect, analyze and bring attention to this type of dangerous behavior to help the community confront it.

If you would like to contribute to this project, please visit our feedback section, which showcases instances of hate speech, and tell us what you think.

If you’re a developer or content creator and would like to explore our abuse detection module further, we invite you to give it a try. For more information about this and other tools included in Codeq NLP API, please visit our website.

References

Burnap, P., & Williams, M. L. (2014). “Hate speech, machine classification and statistical modeling of information flows on Twitter: Interpretation and communication for policy decision making”. In Proceedings of the Internet, Politics, and Policy conference.

Malvar, P. (2020). “Detecting Abuse Online”. https://codeq.com/detecting_abuse_online. Last accessed January 5th 2021.

Risch, J., & Krestel, R. (2018). “Aggression Identification Using Deep Learning and Data Augmentation”. In Proceedings of the First Workshop on Trolling, Aggression and Cyberbullying. pp. 150–158.

Wikipedia (2020). “List of incidents of xenophobia and racism related to the COVID-19 pandemic”. https://en.wikipedia.org/wiki/List_of_incidents_of_xenophobia_and_racism_related_to_the_COVID-19_pandemic#cite_note-:0-278. Last accessed January 5th 2021.