By Paulo Malvar, Chief Computational Linguist

Sixteen years after it was originally coined (see van Rijmenam, 2013), the term “Big Data” has slowly faded from our vocabulary. There was a time, though, in the early 2010s, when it was all the rage. But make no mistake: “Big Data” is still here, and it is not going anywhere. Behemoths like Google or Facebook (or totalitarian regimes like China's, for that matter) still use unfathomable amounts of data to extract insights and distill knowledge from the billions of data points that people leave behind like breadcrumbs as they go about their everyday lives.

Most Machine Learning (ML) algorithms, and especially modern Deep Learning algorithms, are data-hungry. The need for data is a pain that most of us ML practitioners feel every day as we develop models that can perform interesting and novel tasks.

Sometimes this really feels like the famous scene from the Marx Brothers movie “Go West”, in which Groucho frantically shouts “Timber!! Timber!!!” as Harpo desperately dismantles the train they are riding to keep feeding the raging machine.

However, being in a position to gather and leverage such large amounts of data is a pipe dream for most of us. This is an arms race we lost a long time ago.

After a decade of watching the “magical things” these entities have been able to do with all the world’s data, many of us have been conditioned to think that more data is always better. Or, even worse, that without all the data there is no point in trying.

We at Codeq are certainly not in a position to access all the world’s data. As a startup, we have understood this constraint since our inception and have learned to use it as a strength, not a weakness.

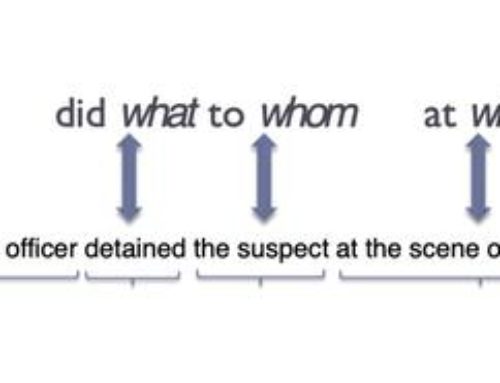

Instead of leveraging “Big Data” to train our Natural Language Processing (NLP) models, we rock “Small Data”. Some might argue, though, that this is not actually the case, and that what we really face is the problem of not having enough data. This is true to some extent, and we certainly take advantage of ML techniques like transfer learning (“Knowledge Transfer”) to alleviate the issue. However, there is a human factor, in the form of domain expertise, that Codeq has leveraged for years to build the wide range of NLP solutions we offer via our NLP API.

If, as Martin Lindstrom points out (Lindstrom, 2016), “Big Data” is primarily a quantitative approach to understanding and solving problems, then “Small Data” is fundamentally a qualitative one.

“[…] you should be spending time with real people in their own environments. That, combined with careful observation, can lead to powerful […] insights.” (Dooley, 2016)

Obviously, this does not translate perfectly to what we do at Codeq, but it captures the care and attention we put into understanding the data we use to train our NLP models.

Although we make the most of freely available NLP datasets, in many cases datasets for the tasks we want our models to perform either do not exist or are prohibitively expensive, as Arsenault (2018) also points out.

As a team of computational linguists, we leverage our domain expertise and spend a lot of time not only collecting these datasets but also analyzing and understanding them, identifying where they shine and where they fall short. This allows us to tailor datasets to our users’ needs and to stay in closer touch with what I call our on-the-ground reality. Or, as Wilson and Daugherty (2020) put it:

“Mastering the human dimensions of marrying small data and AI could help make the competitive difference for many organizations, especially those finding themselves in a big-data arms race they’re unlikely to win.”

Throughout my career, I have seen the results of the opposite approach many times: not understanding the datasets used to train ML models, often because they are too big to be understood deeply. (Anyone remember Google’s image recognition fiasco back in 2015?)

Raiden (2020) elegantly explains why this is to be expected:

“The more data you have, the more likely it is you do not understand its context. It doesn’t speak for itself.”

Small(er) datasets, however, give ML practitioners who are willing to get their hands dirty, thoroughly studying and massaging their data, the ability to better understand their models’ predictions and behavior.
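As a minimal illustration of what “thoroughly studying” a small labeled dataset can look like, here is a generic Python sketch that checks three common problems: class imbalance, duplicated texts (which can leak between training and test splits), and texts annotated with conflicting labels. The function name `audit_dataset` and the toy examples are hypothetical, and this is not a description of Codeq's internal tooling; it only shows the kind of inspection that stays practical when a dataset is small enough to read.

```python
from collections import Counter, defaultdict


def audit_dataset(examples):
    """Summarize a small labeled text dataset (hypothetical helper).

    examples: list of (text, label) pairs.
    Returns (label_counts, duplicated_texts, conflicting_texts).
    """
    # Count examples per label to expose class imbalance.
    label_counts = Counter(label for _, label in examples)

    # Group labels by lightly normalized text (lowercased, whitespace collapsed)
    # so trivial variants of the same sentence end up together.
    labels_by_text = defaultdict(list)
    for text, label in examples:
        key = " ".join(text.lower().split())
        labels_by_text[key].append(label)

    # Texts that occur more than once: candidates for de-duplication.
    duplicates = sorted(k for k, v in labels_by_text.items() if len(v) > 1)

    # Texts annotated with more than one distinct label: annotation disagreements.
    conflicts = sorted(k for k, v in labels_by_text.items() if len(set(v)) > 1)

    return label_counts, duplicates, conflicts


if __name__ == "__main__":
    data = [
        ("I love this product", "positive"),
        ("i love this  product", "positive"),
        ("Terrible service", "negative"),
        ("It's fine I guess", "positive"),
        ("It's fine I guess", "neutral"),
    ]
    counts, dups, conflicts = audit_dataset(data)
    print(counts)     # Counter({'positive': 3, 'negative': 1, 'neutral': 1})
    print(dups)       # ['i love this product', "it's fine i guess"]
    print(conflicts)  # ["it's fine i guess"]
```

Checks like these are cheap on any dataset, but on a small one each flagged item can actually be read and resolved by a person with domain expertise, which is the point the paragraph above makes.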

At Codeq, we love what we do, and I truly believe that shows in the way we approach NLP and ML.

So come play with us at our small NLP boutique shop and give your projects superpowers with our NLP API.

References

Arsenault, Bradley (2018). “Why small data is the future of AI”. https://towardsdatascience.com/why-small-data-is-the-future-of-ai-cb7d705b7f0a. Last accessed January 8, 2021.

Boscacci, Robert (2018). “Small Data, and Getting It”. https://towardsdatascience.com/small-data-and-getting-it-4b3ed795982c. Last accessed January 8, 2021.

Dooley, Roger (2016). “Small Data: The Next Big Thing”. https://www.forbes.com/sites/rogerdooley/2016/02/16/small-data-lindstrom/?sh=3ed35b497870. Last accessed January 8, 2021.

Lindstrom, Martin (2016). Small Data: The Tiny Clues That Uncover Huge Trends. Picador (paperback).

Raiden, Neil (2020). “Does small data provide sharper insights than big data? Keeping an eye open, and an open mind”. https://diginomica.com/does-small-data-provide-sharper-insights-big-data-keeping-eye-open-and-open-mind. Last accessed January 8, 2021.

van Rijmenam, Mark (2013). “A Short History Of Big Data”. https://datafloq.com/read/big-data-history/239. Last accessed January 8, 2021.

Wilson, H. James & Daugherty, Paul R. (2020). “Small Data Can Play a Big Role in AI”. https://hbr.org/2020/02/small-data-can-play-a-big-role-in-ai. Last accessed January 12, 2021.