Problem: Staying informed in a rapidly moving news cycle is overwhelming

Solution: Use machine learning-powered text summarization and analysis to manage information

We live in a time when staying informed is a necessity. Yet what it means to be truly informed is hard to define, especially given both the ever-increasing volume of information and its wide variability. An average person usually cannot devote extensive time to following the news and keeping up with all of their interests, so many of us are continually at risk of falling behind, to varying degrees. Difficult as information overload is, advances in natural language processing (NLP) promise to play an increasingly important role in addressing it. More specifically, extractive summarization, the area of NLP focused on automatically extracting the most important parts of a given piece of text (e.g., a news article), can help us save time and effort by reducing the amount of material we need to read to keep abreast of the state of the world. In this article, we describe our efforts to build a technology that does exactly that for the news domain: it draws on a large pool of information sources to continuously update a platform on which this information is automatically summarized and presented to the end user. The software is called Capsule and, as its name suggests, the goal behind it is to gather and encapsulate what is otherwise scattered and diluted.

If we had to sum up where Capsule comes from and why it was built, the proverb “necessity is the mother of invention” would be the first thing to come to mind: the real need to make it easier to stay sufficiently informed is what prompted the team behind Capsule to build the technology. In particular, our dissatisfaction with the summarization tools we were using for our own daily news digest set us thinking about how to meet this pressing information need. From the very beginning, our efforts were guided by the vision that summaries can be both informative and pleasant to read. News summarizers do not have to produce incoherent texts that distract rather than inform. Nor does the technology have to be restrictive about the original sources from which stories are drawn. We needed a technology that transcends the information gatekeeping we may be subjected to, voluntarily or involuntarily, and that brings to our attention what we would otherwise miss because we unconsciously read only a few news sources. We also needed a natural language technology whose output is genuinely coherent, natural language like what humans write: summaries that readers would attribute to a human rather than to a machine.

Combining the Best of Two Worlds: How Capsule was Built

It can be argued that the best-performing natural language technology systems today are those that apply cutting-edge artificial intelligence (AI) algorithms to huge amounts of data. Classically, many systems have instead relied primarily on rules derived from linguistic insights. In Capsule, these two worlds meet: we use state-of-the-art deep learning methods, which are loosely modeled on the human brain, and we ground our work in text linguistics. We now sketch each of these fronts:

Deep Learning:

Rather than treating words as atomic symbols, as has long been the practice in NLP, we embed them (Bengio et al., 2001; Bengio et al., 2003), building on the concept of distributed representations for symbols (Hinton, 1986). Recent approaches to learning distributed representations of words (Mnih & Hinton, 2007; Collobert & Weston, 2008; Turian et al., 2010; Collobert et al., 2011; Mikolov et al., 2011) have achieved remarkable success. We employ word embeddings computed with Hellinger PCA (Lebret & Collobert, 2013; 2015), which applies principal component analysis to word co-occurrence probabilities acquired from very large corpora (e.g., the whole of English Wikipedia). This approach has been shown to achieve state-of-the-art results on many tasks, and we have found it highly effective in many of Capsule's internal tasks.
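To make the idea concrete, here is a minimal sketch of Hellinger PCA in the spirit of Lebret & Collobert, assuming a toy corpus, a symmetric context window of two words, and plain NumPy. The function names `cooccurrence_matrix` and `hellinger_pca` are hypothetical and not part of Capsule; a real system would use a far larger corpus and a restricted context vocabulary.

```python
import numpy as np


def cooccurrence_matrix(sentences, window=2):
    """Count how often each context word appears within `window` tokens of each word."""
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    counts = np.zeros((len(vocab), len(vocab)))
    for s in sentences:
        for i, word in enumerate(s):
            lo, hi = max(0, i - window), min(len(s), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[index[word], index[s[j]]] += 1
    return vocab, index, counts


def hellinger_pca(counts, dim=2):
    """Embed words by PCA over square-rooted context distributions."""
    row_sums = counts.sum(axis=1, keepdims=True)
    row_sums[row_sums == 0] = 1.0  # avoid division by zero for isolated words
    probs = counts / row_sums  # each row is P(context | word)
    # After the square root, Euclidean distance between rows equals sqrt(2)
    # times the Hellinger distance between the corresponding distributions.
    roots = np.sqrt(probs)
    centered = roots - roots.mean(axis=0)
    # Principal directions are the right singular vectors of the centered matrix.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:dim].T


corpus = [["the", "cat", "sat"], ["the", "dog", "sat"]]
vocab, index, counts = cooccurrence_matrix(corpus)
embeddings = hellinger_pca(counts, dim=2)
print(embeddings.shape)
```

Because "cat" and "dog" occur in identical contexts in this toy corpus, they receive identical vectors, which is the distributional intuition the embeddings rely on.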

Linguistic Motivations:

To account for the readability of extracted summaries, we draw on theories from functional and text linguistics. In particular, we address problems of summary coherence by identifying inter-sentential linguistic devices and preventing them from appearing in undesirable ways in extracted texts. Importantly, we model coherence without discarding the parts of texts that would otherwise disrupt global summary coherence: nuanced machine learning components fire in certain places in the overall architecture but not in others, so such sentences can still inform the model without appearing in the summary.

Performance:

To ensure that Capsule returns summaries that meet user expectations, we had humans evaluate its output against that of the Yahoo! News Summarizer, to which we had access. The evaluation covered a total of 232 summaries and was performed by five human coders with native English fluency and post-college education. For each summary, the judge was presented with 1) the original story title, 2) the original story URL, 3) summary A (Capsule), and 4) summary B (Yahoo! News Summarizer). The coder therefore had enough information to check each summary against the original story it was extracted from, and the task was to choose the better summary based on overall coherence, informativity, and how well the summary represented the given story headline. Our evaluations show that Capsule compares very favorably with the Yahoo! News Summarizer.
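A pairwise preference study like this one is commonly summarized as win counts plus an exact two-sided sign (binomial) test against the null hypothesis that judges have no preference. The sketch below assumes each judgment is recorded as "A", "B", or "tie", with ties dropped; the function name and the toy labels are hypothetical and are not Capsule's actual evaluation data, which the text does not report item by item.

```python
from math import comb


def sign_test(judgments):
    """Return (wins for A, wins for B, two-sided exact p-value), ignoring ties."""
    a = sum(1 for j in judgments if j == "A")
    b = sum(1 for j in judgments if j == "B")
    n = a + b
    if n == 0:
        return a, b, 1.0
    k = min(a, b)
    # Probability of a split at least this lopsided under Binomial(n, 0.5),
    # doubled for the two-sided test and capped at 1.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return a, b, min(1.0, 2 * tail)


# Hypothetical toy labels, not real results.
labels = ["A"] * 9 + ["B"] * 1 + ["tie"] * 2
wins_a, wins_b, p = sign_test(labels)
print(wins_a, wins_b, round(p, 4))
```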

A Brief Description of the Pipeline and Its Component Technologies:

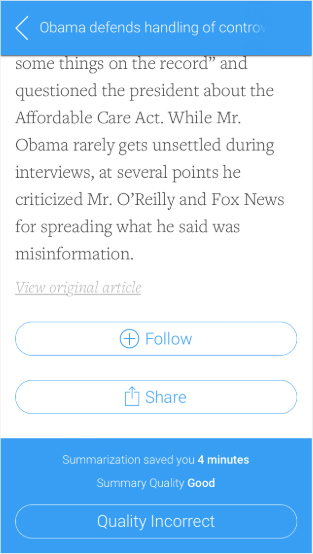

Another confounding factor is that many of us, when we do read the news, do so primarily on our phones, and hence must exert greater effort to make sense of short texts on small screens. What makes it even harder is that many news-summarizing apps are superficial tools that extract sentences and glue them together, with little coherence and no deep understanding of the language of summaries. We thus end up with summaries that are incoherent, uninformative, unpleasantly distracting, and sometimes longer than we would wish. At Codeq, we have identified what users really need when they turn to their phones or tablets to read the news, whether those needs relate to the types of stories, the quality, informativity, and length of summaries, the usability of interfaces, or the update rate of the stories provided, to name a few. In this article, we describe how we have used advanced natural language processing to build a state-of-the-art news summarizer whose results are closer to user expectations than those of available industry technology, including the Yahoo! News Summarizer.

Single Document Summarization:

Given a news story, Capsule filters out copies judged to be duplicates of the same story and returns a summary of the document. Capsule thus saves the user time not only by summarizing documents but also by shrinking the space of news stories through the removal of duplicate articles.
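The text does not say how duplicates are detected. One common approach, shown here purely as an illustrative assumption, compares the sets of word trigrams ("shingles") in two articles using Jaccard similarity and drops any article that is too similar to one already kept. The 0.5 threshold and the function names are hypothetical.

```python
import re


def shingles(text, k=3):
    """Return the set of lowercase word k-grams ("shingles") in `text`."""
    tokens = re.findall(r"\w+", text.lower())
    if len(tokens) < k:
        return {tuple(tokens)} if tokens else set()
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(articles, threshold=0.5, k=3):
    """Keep each article unless it is a near-duplicate of one already kept."""
    kept, kept_shingles = [], []
    for text in articles:
        sh = shingles(text, k)
        if all(jaccard(sh, other) < threshold for other in kept_shingles):
            kept.append(text)
            kept_shingles.append(sh)
    return kept


stories = [
    "The president said the crisis will be solved soon.",
    "The president said the crisis will be solved soon, officials added.",
    "Stocks rallied on Friday after strong earnings reports.",
]
print(deduplicate(stories))
```

The second story shares 7 of its 9 trigrams with the first (Jaccard about 0.78), so it is dropped; at scale, techniques such as MinHash would approximate the same comparison more cheaply.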

Linguistically Motivated Extraction:

Capsule draws on a significant body of linguistic literature to produce highly coherent and strongly informative summaries. Its linguistically motivated summarization computes information from both a news story's title and its body text. On the one hand, an article's title encapsulates the gist of the story, which is then unfolded in the body, and hence carries important semantic information that should not be ignored in the output summary. On the other hand, the title is often short and does not tell readers as much as they may want to know about the article's content, so we designed Capsule to take into account not only the title but also every sentence in the body text, which increases the informativity of the summary. Not all sentences are treated equally, however: the stream of body sentences undergoes deep linguistic analysis to determine each sentence's informativity and its contribution to overall coherence. Capsule then builds the global model that ensures an easily readable, informative, and highly coherent summary.
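The text does not specify how title and body information are combined. As one illustrative possibility, the sketch below scores each body sentence by a weighted mix of its bag-of-words cosine similarity to the title and to the centroid of the whole body. The weight `alpha` and all function names are hypothetical; a system like Capsule would more plausibly compare sentences in an embedding space such as the Hellinger PCA one described earlier.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts for a piece of text."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def score_sentences(title, sentences, alpha=0.5):
    """Score body sentences by similarity to the title and to the body centroid."""
    title_vec = bag_of_words(title)
    vectors = [bag_of_words(s) for s in sentences]
    centroid = sum(vectors, Counter())  # summed counts of the whole body
    return [alpha * cosine(v, title_vec) + (1 - alpha) * cosine(v, centroid) for v in vectors]


title = "Storm hits coastal towns"
body = [
    "A powerful storm hit several coastal towns overnight.",
    "Officials urged residents to stay indoors.",
    "Coastal towns reported flooding after the storm.",
]
print([round(s, 3) for s in score_sentences(title, body)])
```

Sentences that echo both the headline and the rest of the article score highest, while the sentence sharing no words with the title scores lowest.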

We now explain how our models account for informativity and coherence.

Informativity:

To decide the informativity of a given sentence, Capsule assigns it a binary label from the set {informative, non-informative}. Only boilerplate sentences are labeled non-informative; examples include the sentence “Click here to read this similar article” and the shorter text chunk “See more:…”. Sentences labeled non-informative are removed from the article and are not used by the models any further, because such sentences carry no information that would add to the semantic diversity of a summary. In our observation, few sentences end up tagged as non-informative, simply because writers rarely use boilerplate sentences in news stories. News stories, after all, should carry something new to be ‘news.’
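A rule-based version of the informative/non-informative decision can be sketched with a few regular expressions. The patterns below are hypothetical examples modeled on the two boilerplate cases quoted above; they are not Capsule's actual rule set, which the text does not list.

```python
import re

# Hypothetical boilerplate patterns, modeled on the examples in the text.
BOILERPLATE_PATTERNS = [
    re.compile(r"^\s*click here\b", re.IGNORECASE),
    re.compile(r"^\s*see more\s*:", re.IGNORECASE),
    re.compile(r"^\s*(read more|related)\s*:", re.IGNORECASE),
]


def informativity_label(sentence):
    """Return 'non-informative' for boilerplate sentences, else 'informative'."""
    if any(p.search(sentence) for p in BOILERPLATE_PATTERNS):
        return "non-informative"
    return "informative"


def drop_boilerplate(sentences):
    """Keep only sentences labeled informative; later stages never see the rest."""
    return [s for s in sentences if informativity_label(s) == "informative"]


article = [
    "The central bank raised interest rates on Tuesday.",
    "Click here to read this similar article",
    "See more: markets coverage",
    "Analysts expect further increases this year.",
]
print(drop_boilerplate(article))
```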

Coherence & Cohereability:

Different texts have different degrees of readability. As readers, we are always trying to construct a mental representation of a given text. Writers often employ a host of linguistic devices to facilitate readability and hence build coherent texts in which readers can ‘connect the dots’ and easily construct the mental representation needed to grasp what they read (see, e.g., Barzilay & Lapata, 2005: 141; Louwerse & Graesser, 2005: 217). A summarizer that seeks to generate useful and pleasing texts should benefit from this knowledge of how discourse is created and understood. For this reason, discourse coherence is a concept central to Capsule, and we now explain how Capsule caters for it.

Related to discourse coherence, we introduce the concept of ‘cohereability.’ We use the term cohereability to refer to the extent to which a given unit of analysis (e.g., a sentence) can contribute to the overall coherence of a generated summary. For cohereability, we assign a tag from the set {cohereable, non-cohereable} to each of a news article's body text sentences. Cohereability tagging takes place after the informativity filtering explained above, so sentences labeled non-informative are not considered at this stage. To decide cohereability, we perform a linguistic analysis of the body text sentences: for each sentence, Capsule identifies whether or not it carries one of a set of 26 discourse connectors such as “moreover” and “however,” and if it does, the sentence is labeled non-cohereable. To see the rationale, imagine a summary that starts with the sentence “However, the president said the crisis is going to be solved.” Articles do not usually start that way, and hence no summary should either; a reader would find such an opening jarring. More generally, sentences that include discourse connectors can harm the global coherence of a summary, because extracting them breaks the sentence-to-sentence transitions of ideas and concepts that these markers articulate locally within the news article. For this reason, non-cohereable sentences are excluded from the final extracted summary. Since these sentences do carry important semantic information, however, they are still used in measuring similarity.
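The division of labor described above, where non-cohereable sentences still inform similarity but are never extracted, can be sketched as a selection step over precomputed scores. In this minimal sketch, `scores` are assumed to come from whatever similarity model the system uses (computed over all informative sentences, cohereable or not), and `k`, the summary length in sentences, is a hypothetical parameter. Selected sentences are returned in their original order to preserve the article's flow.

```python
def select_summary(sentences, scores, cohereable, k=2):
    """Pick the k highest-scoring cohereable sentences, kept in document order."""
    candidates = [i for i in range(len(sentences)) if cohereable[i]]
    # Non-cohereable sentences may have high scores, but they are never extracted.
    best = sorted(candidates, key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(best)]


sentences = [
    "The president addressed the nation on Monday.",
    "However, the president said the crisis is going to be solved.",
    "Officials announced new relief funding for affected regions.",
    "Markets reacted calmly to the announcement.",
]
scores = [0.8, 0.9, 0.7, 0.3]  # hypothetical similarity scores
cohereable = [True, False, True, True]
print(select_summary(sentences, scores, cohereable))
```

Even though the "However, ..." sentence has the highest score, it cannot open or appear in the summary.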

Capsule uses the following linguistic devices to identify non-cohereable sentences:

– personal pronouns: e.g., ‘he’, ‘his’, ‘she’, ‘her’

– demonstrative pronouns: e.g., ‘that’, ‘this’, ‘those’, ‘these’

– adversative conjunctions: e.g., ‘but’, ‘however’, ‘However’

– concessive conjunctions: e.g., ‘so’, ‘thus’, ‘although’

– coordinating conjunctions: e.g., ‘and’, ‘or’

– adverbial discourse connectors: e.g., ‘now’, ‘immediately’, ‘straightaway’, ‘meanwhile’

– metadiscourse connectors: e.g., ‘below’, ‘latter’, ‘above’

– discourse markers: e.g., ‘instead’, ‘hence’, ‘otherwise’, ‘likewise’

– temporal adverbs: e.g., ‘now’, ‘immediately’, ‘straightaway’, ‘meanwhile’

– interrogative pronouns: e.g., ‘who’, ‘what’, ‘how’, ‘where’, ‘which’
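A minimal sketch of the cohereability test follows. It assumes, as the “However, …” example suggests, that a marker triggers the non-cohereable label when it begins the sentence; the text does not say whether markers elsewhere in a sentence count. It also uses only a subset of the example words listed above rather than Capsule's full set of 26 connectors, which the text does not enumerate. The function name `cohereability` is hypothetical.

```python
import re

# Hypothetical subset of markers taken from the example words in the list above.
NON_COHEREABLE_MARKERS = {
    "he", "his", "she", "her",          # personal pronouns
    "that", "this", "those", "these",   # demonstrative pronouns
    "but", "however",                   # adversative conjunctions
    "so", "thus", "although",           # concessive conjunctions (as listed)
    "and", "or",                        # coordinating conjunctions
    "now", "meanwhile", "immediately",  # adverbial and temporal connectors
    "instead", "hence", "otherwise", "likewise",  # discourse markers
}


def cohereability(sentence):
    """Label a sentence non-cohereable if its first word is a known marker."""
    words = re.findall(r"[A-Za-z']+", sentence)
    if words and words[0].lower() in NON_COHEREABLE_MARKERS:
        return "non-cohereable"
    return "cohereable"


print(cohereability("However, the president said the crisis is going to be solved."))
print(cohereability("The president said the crisis is going to be solved."))
```

Lowercasing the first word means ‘However’ and ‘however’ are matched by a single entry.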

Strongly rejected candidates:

Strongly rejected sentences are sentences that do not contribute to the concepts and ideas that the news article elaborates. These sentences can thus be considered discourse boilerplate.

Linguistic markers that signal strongly rejected sentences are:

– Gratitude nouns and verbs

– Greeting exclamations
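Detecting strongly rejected sentences can likewise be sketched as a lexicon lookup. The word lists below are hypothetical examples of gratitude nouns and verbs and greeting exclamations; the text names the two categories but not their members, and a real system would likely match lemmas and context rather than a fixed set of surface forms.

```python
import re

# Hypothetical lexicons for the two marker categories named in the text.
GRATITUDE_WORDS = {"thank", "thanks", "thanked", "thanking", "gratitude", "grateful", "appreciate"}
GREETING_EXCLAMATIONS = {"hello", "hi", "hey", "greetings", "welcome"}


def strongly_rejected(sentence):
    """True if the sentence opens with a greeting or contains a gratitude word."""
    words = [w.lower() for w in re.findall(r"[A-Za-z']+", sentence)]
    if not words:
        return False
    return words[0] in GREETING_EXCLAMATIONS or any(w in GRATITUDE_WORDS for w in words)


for s in ["Thanks for reading!", "Hello and welcome to our live coverage.", "The storm damaged 200 homes."]:
    print(s, strongly_rejected(s))
```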

Document Categorization:

We use advanced natural language processing technologies to categorize the news space into 9 main categories: U.S. News, World News, Business, Technology, Politics, Sports, Entertainment, Science, and Health. This thematic grouping of news stories is meant to appeal to user interests and to give different audiences easy, quick access to the articles that interest them.

Machine Learning:

We use supervised machine learning to train classifiers that learn the different news categories, dividing the news space into the 9 thematic groupings listed above.
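The text does not name the classifier. As a self-contained stand-in, the sketch below trains a multinomial Naive Bayes model with add-one smoothing on bag-of-words features. The class name, the toy training set, and the use of only two of the nine categories are illustrative assumptions; a production model would be trained on many labeled articles per category.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesCategorizer:
    """Multinomial Naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # category -> word frequencies
        self.doc_counts = Counter(labels)        # category -> number of documents
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_docs = len(labels)
        return self

    def log_posterior(self, text, label):
        """Unnormalized log P(label | text)."""
        counts = self.word_counts[label]
        total = sum(counts.values())
        score = math.log(self.doc_counts[label] / self.total_docs)
        for word in tokenize(text):
            if word in self.vocab:  # skip words never seen in training
                score += math.log((counts[word] + 1) / (total + len(self.vocab)))
        return score

    def predict(self, text):
        return max(self.doc_counts, key=lambda label: self.log_posterior(text, label))


train_texts = [
    "the team won the championship game",
    "the striker scored two goals in the match",
    "shares fell as the company reported losses",
    "the bank raised interest rates and markets fell",
]
train_labels = ["Sports", "Sports", "Business", "Business"]
model = NaiveBayesCategorizer().fit(train_texts, train_labels)
print(model.predict("the goalkeeper saved the match"))
print(model.predict("markets fell after the bank report"))
```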

Evaluation:

We performed a rigorous human evaluation of the resulting Capsule summaries: a total of 232 Capsule summaries were given to five human coders with native English fluency and post-college education. For each summary, the judge was presented with 1) the original story title, 2) the original story URL, 3) summary A (Capsule), and 4) summary B (Yahoo! News Summarizer). The coder therefore had enough information to check each summary against the original story it was extracted from, and the task was to choose the better summary based on overall coherence, informativity, and how well the summary represented the given story headline.

Labels from each pair of the human judges were acquired and compared.
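The text does not say which agreement statistic was used. One standard choice for comparing two judges' categorical labels is Cohen's kappa, which corrects raw agreement for the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). The sketch below computes it for every pair of judges; the judge names and labels are hypothetical placeholders, not the study's data.

```python
from collections import Counter
from itertools import combinations


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both judges used the same single label throughout
    return (observed - expected) / (1 - expected)


def pairwise_kappas(judgments):
    """Map each pair of judge names to their kappa on the same items."""
    return {
        (j1, j2): cohens_kappa(judgments[j1], judgments[j2])
        for j1, j2 in combinations(sorted(judgments), 2)
    }


# Hypothetical preferences ("A" = Capsule, "B" = Yahoo!) on the same four items.
judgments = {
    "judge1": ["A", "A", "B", "A"],
    "judge2": ["A", "A", "B", "B"],
    "judge3": ["A", "A", "B", "A"],
}
for pair, kappa in pairwise_kappas(judgments).items():
    print(pair, round(kappa, 3))
```

With five judges this yields ten pairwise values, which can be reported individually or averaged.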

A Life Preserver in a Sea of Information

The modern news cycle moves faster than ever. It's difficult to stay afloat with so much information assaulting your senses every hour, and we simply don't have the time to read every salient piece of news that affects our lives. With Capsule and extractive summarization, the area of natural language processing that automatically extracts the most important parts of a text, we can keep our heads above water by significantly cutting down how much we need to read to stay informed.